Exercise 6: Data Normalization, Geocoding, and Error Assessment

Goal:

The purpose of this lab was to become familiar with normalizing data so that it could be processed, geocoding addresses, and assessing errors in acquired data. We were provided data for all of the current frac sand mines in the state of Wisconsin. This data was provided by the Wisconsin DNR and had not been normalized or altered so that it could be successfully geocoded. Once we normalized the data, it was geocoded to begin to spatially analyze where the frac sand mines were located. We also had to assess errors within the data, as our classmates geocoding may have differed slightly from ours, or they may have normalized the data in a different manner.

Methods:

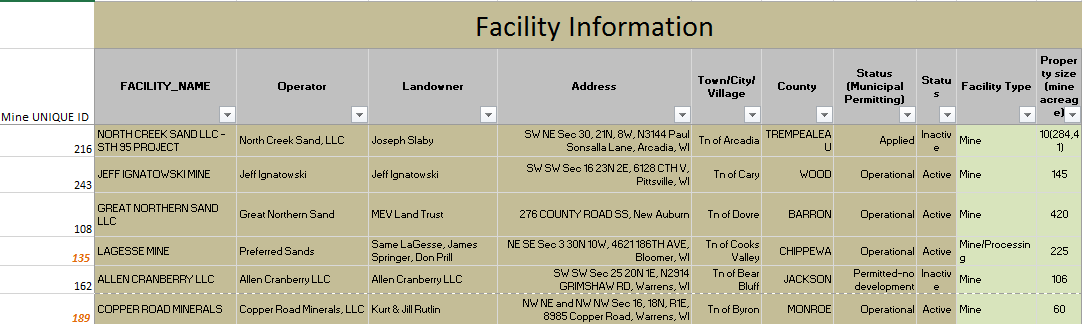

The first step was to normalize the acquired data. Many of the addresses for the mines did not provide street addresses. A number of the mines were listed in the Public Land Survey System (PLSS), and had no addresses at all. The addresses of the mines were also not divided up the same way, by address, city, and county. In an attempt to normalize the data, the web GIS servers for each county were opened and the parcel information was gathered. If not address was available for the parcel being located, a proximal parcel was chosen if it provided an access road to the desired parcel. Figures 1 and 2 show a comparison between the original data and the data after normalization. Once all of the data were normalized, they were ready to be geocoded.

After normalizing the data, the excel file was ready to import into ArcMap to be referenced for the geocoding process. In this instance, all of the data were normalized in a manner that made geocoding possible, allowing for a match for all data points. To attempt to compare our results with those of our classmates, all of the other geocoded data had to be merged. Figure 3 shows a map of west-central Wisconsin and the results of my geocoding and the geocoding of the rest of the class.

A query was developed to select all of the mines geocoded by our classmates with the same Mine UNIQUE ID field as our own. After these were selected a new feature class was created to make comparing the selected mines of our classmates to our own. This allowed us to utilize the Point Distance tool in the ArcMap toolbox to calculate the distance from out point to the surrounding mines. After projecting the geocoded results, this tool was run and a table was produced. If the Distance field listed a distance of 0 meters, there was a perfect match and that was the same mine. If there was a difference then it meant that there was a difference in where the same mine was geocoded based on normalization differences, or the distance was measured to another mine. Figure 4 shows the table with feature ID classes listed and the distances between the input and near feature ID.

Discussion:

There are a number of possible explanations for the reasons behind the variation in distances from geocoding. These error types are listed in an assigned class reading Lo (2003). I did not notice any gross errors in this data (errors caused by blunders or mistakes). If the entire class had been trained on standardization prior to the normalization of the data, an argument would be correct that there would be gross errors. However, because the purpose of the exercise was to learn proper methods of how standardizing should be conducted, no standardization method was set, and therefore there was not really any "gross errors." There were definitely systematic errors in the data caused by human bias when determining where the mine should be placed, if a manual placement had to be assigned.

Inherent errors in the data are also a main source of the error in the geocoded data. According to Lo (2003), there is inherent error in digitizing and attribute data input. Both of these were conducted in this lab and both had inherent error within them. Each dataset was normalized by a different student, which meant that there were as many different methods as there were students for normalizing the data. This lead to different ways that things were divided, recorded, and listed. Operation errors were also very much a part of this lab, for many of the same reasons as listed previously. There is error that will come with user bias and different methods that nothing will alter but prestandardization of the normalization process. Even after standardizing, it is hard to remove all the error possibilities.

In order to determine which points are correct and which points are off, we would need a perfect dataset. We would need addresses for every mine in a format that leaves little to no room for error in normalization of the dataset. To determine the most accurate points on our map without a perfect dataset, we would need to come together as a class and decide which points should be counted as the correct location. Then we would be able to determine the distance of our points from the point that was deemed correct.

Conclusion:

Data normalization is something that needs to be determined before the start of a project. In order to ensure that data melds in a manner that makes it usable by all, strict guidelines must be put in place prior to the data management process. If this is not done, the chances that a dataset will be managed differently from another are much higher.

References Cited:

Lo, C., & Yeung, A. (2003). Data Quality and Data Standards. In Concepts and Techniques in Geographic Information Systems (pp. 104-132). Pearson Prentice Hall.

No comments:

Post a Comment